Getting Started with Code Connect UI

Available on a Dev or Full seat on the Organization and Enterprise plans

Requires a Figma library file with published design components

Code Connect UI lets you map design components in your Figma libraries to the corresponding code components in your repository. These mappings enhance the Figma MCP server by giving AI agents direct references to your code, enabling more accurate implementation guidance.

Connecting components from your design library

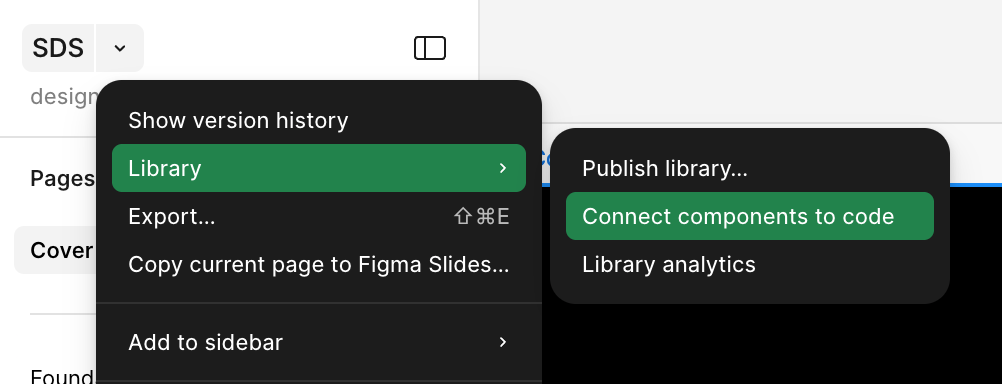

- In Figma, open a library file that contains design components.

- Switch to Dev Mode.

- From the dropdown menu next to the file name, choose Library → Connect components to code.

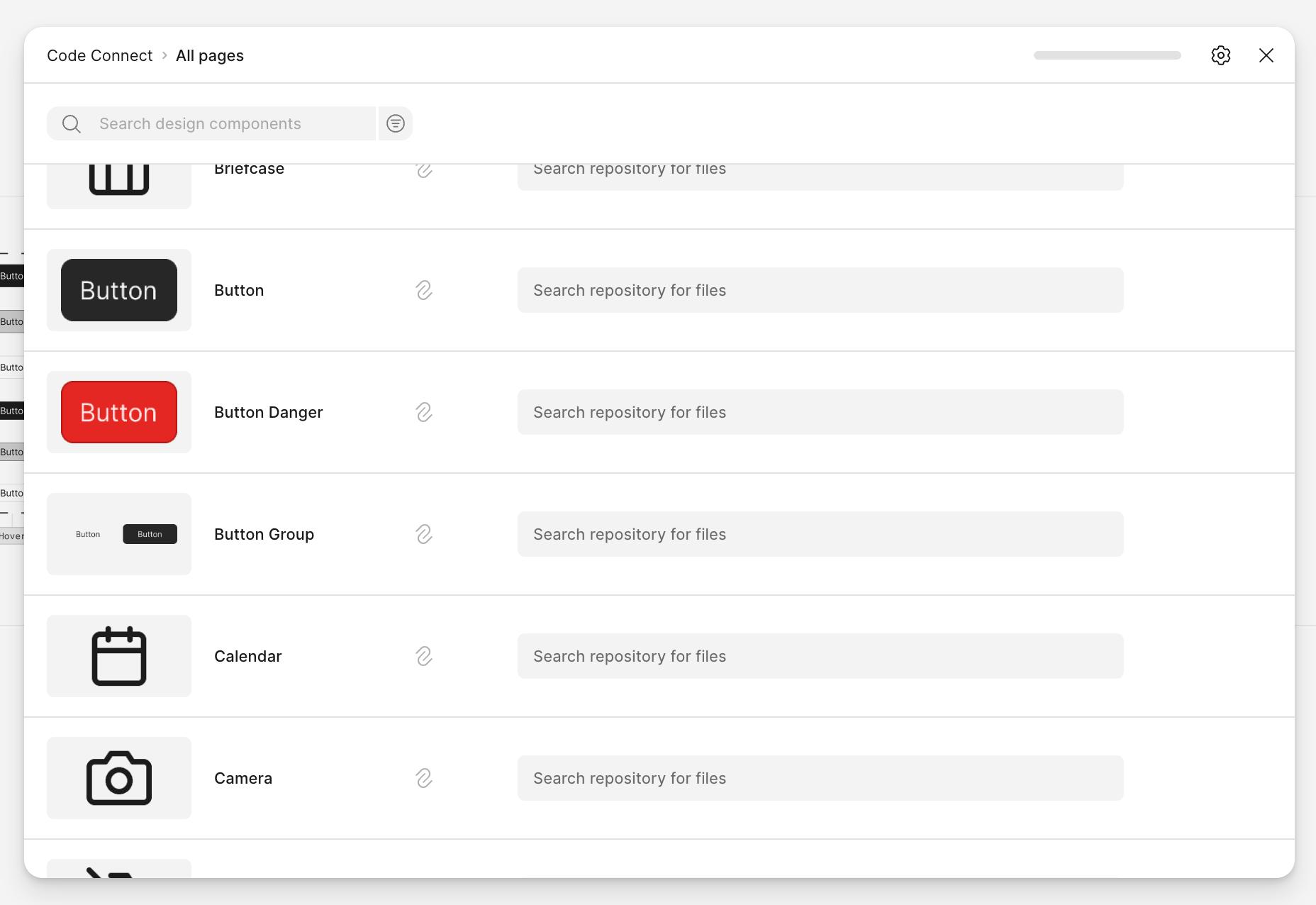

The Code Connect UI opens and lists all published components from the library. From here, you can begin mapping components to your codebase.

Connect your GitHub repository (optional)

Connecting to GitHub is optional. You can map your design system components to code paths manually without a GitHub connection.

Click the Settings icon in the Code Connect UI to connect your GitHub repository.

Connecting to GitHub provides additional features:

- Mapping fields will autocomplete with file paths from your repository.

- You can browse and search for components directly from GitHub.

Manually connecting your components

Without a GitHub connection, you can still create mappings by manually entering:

- The component path in your codebase (e.g., `src/components/Button.tsx`)

- Optionally, the component name (e.g., `Button`)
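Each manual mapping comes down to a small record per design component: a path and an optional name. The TypeScript sketch below models that record and runs a few sanity checks on a manually entered value before you save it. The `ManualMapping` type, the `validateMapping` function, and the specific checks are hypothetical illustrations. They are not Figma's storage format or the validation the Code Connect UI performs. The checks assume a repository-relative path that includes a file extension and a component name that is a valid JavaScript identifier.

```typescript
// Hypothetical model of a manual mapping; NOT Figma's actual data format.
interface ManualMapping {
  figmaComponent: string; // published design component, e.g. "Button"
  path: string;           // component path in your codebase, e.g. "src/components/Button.tsx"
  componentName?: string; // optional code component name, e.g. "Button"
}

type ValidationResult = { ok: true } | { ok: false; errors: string[] };

// Assumed convention for this sketch: names must be valid JavaScript identifiers.
const IDENTIFIER = /^[A-Za-z_$][A-Za-z0-9_$]*$/;

function validateMapping(m: ManualMapping): ValidationResult {
  const errors: string[] = [];
  if (m.path.startsWith("/")) {
    errors.push("path should be relative to the repository root");
  }
  if (m.path.split("/").includes("..")) {
    errors.push("path should not contain '..' segments");
  }
  // The last path segment should look like a file name with an extension.
  const fileName = m.path.split("/").pop() ?? "";
  if (!/\.[A-Za-z0-9]+$/.test(fileName)) {
    errors.push("path should point to a file, including its extension");
  }
  if (m.componentName !== undefined && !IDENTIFIER.test(m.componentName)) {
    errors.push(`"${m.componentName}" is not a valid identifier`);
  }
  return errors.length === 0 ? { ok: true } : { ok: false, errors };
}

// Usage with the example values from the list above.
const result = validateMapping({
  figmaComponent: "Button",
  path: "src/components/Button.tsx",
  componentName: "Button",
});
console.log(result.ok); // true
```

Because the component name is optional, the sketch only checks it when it is present.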

All mappings are shared with the Figma MCP server. Whenever a mapped design component is used, its code context is included in the data sent to AI agents.

When working in your IDE, Code Connect can also provide inline suggestions (open beta) powered by the remote MCP server. These suggestions surface relevant component mappings and code updates in real time, helping you keep your design and code in sync.

Connect components from a selected frame

When you run the Figma MCP server on a selected frame, the quality of results depends on whether its design components are connected to code.

- If the frame contains unmapped components, the MCP server won't have complete context, and results may be less accurate.

- From Dev Mode's inspect panel, click Connect components to open the Code Connect UI, where you can connect any missing components in the current frame.

- As soon as you add mappings, they are shared with the MCP server and used as context, improving the accuracy of AI-generated results.
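Conceptually, finding the gaps in a frame is a set difference: you take the design components used in the frame and remove those that already have a code mapping. The minimal TypeScript sketch below assumes two hypothetical inputs. The first is a list of component names found in the frame, and the second is a map from component name to code path. The `findUnmappedComponents` function is illustrative only. The Code Connect UI performs this check for you, and its internal logic may differ.

```typescript
// Hypothetical shape for illustration: design component name -> code path.
type MappingIndex = Map<string, string>;

// Returns the design components in a frame that have no code mapping yet,
// de-duplicated and in first-seen order.
function findUnmappedComponents(frameComponents: string[], mappings: MappingIndex): string[] {
  const unmapped = new Set<string>();
  for (const name of frameComponents) {
    if (!mappings.has(name)) unmapped.add(name);
  }
  return Array.from(unmapped);
}

const mappings: MappingIndex = new Map([
  ["Button", "src/components/Button.tsx"],
  ["Avatar", "src/components/Avatar.tsx"],
]);

// A frame that uses Button twice, plus a Card and a Badge that are not connected yet.
const missing = findUnmappedComponents(["Button", "Card", "Button", "Badge"], mappings);
console.log(missing.join(", ")); // Card, Badge
```

An empty result corresponds to a frame whose components are all connected, which is the case where the MCP server has complete context.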

Add custom instructions for AI code generation

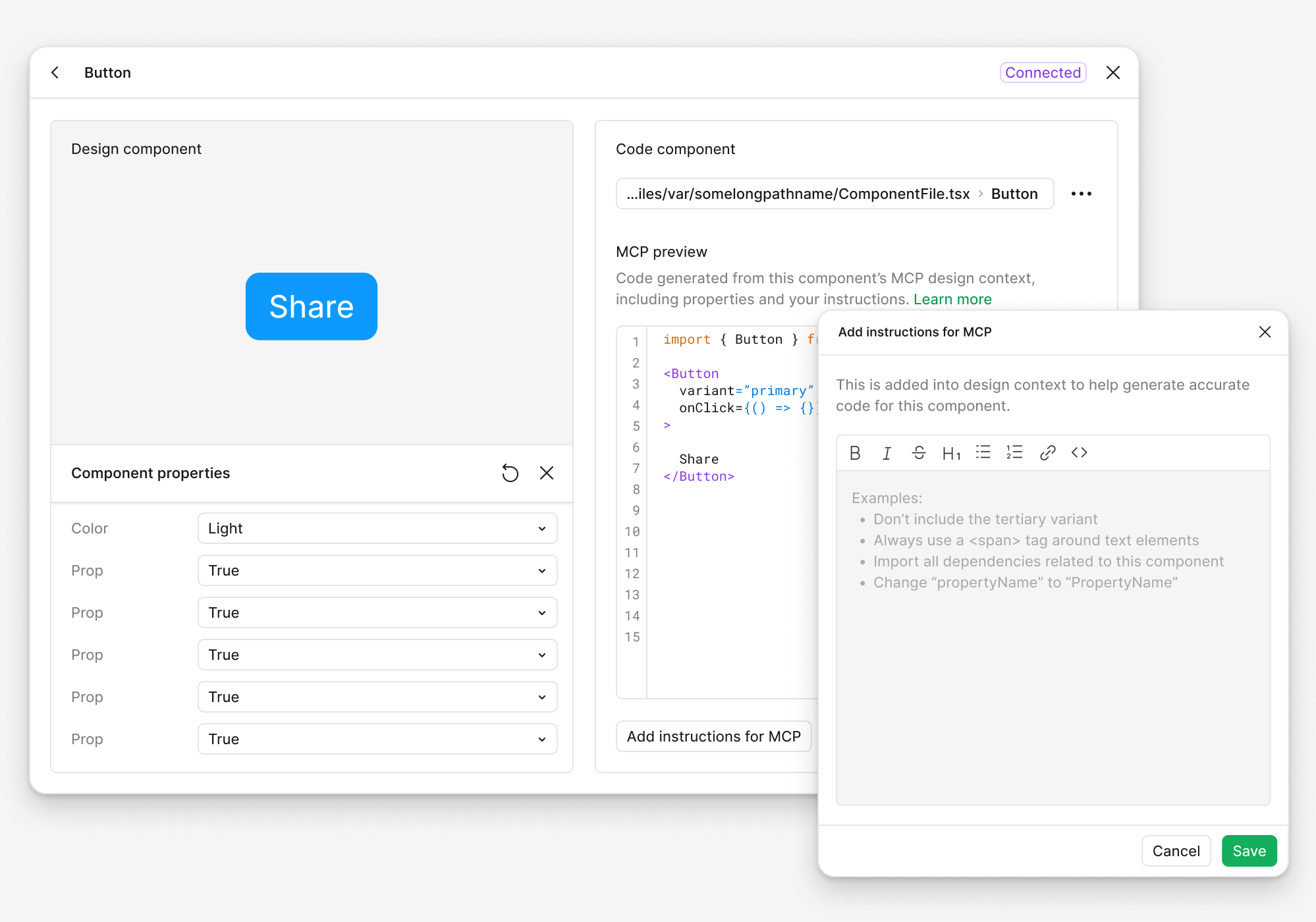

Once you've connected a component to your codebase, you can provide additional context to help AI agents generate better code. This is especially useful for components with specific usage patterns, accessibility requirements, or team conventions.

Add instructions for MCP

For any connected component, you can add custom instructions that your LLM receives through the Figma MCP server:

- In the Code Connect UI, select a connected component.

- Click the Add instructions for MCP button.

- Write instructions (prompts) that describe how the component should be used, such as:

  - Specific props or configuration patterns

  - Accessibility considerations

  - Common use cases or variations

  - Team-specific coding conventions

These instructions are sent along with your component mapping to the MCP server, helping the AI generate code that better matches your design system's implementation.

Preview AI-generated code snippets

To verify that your mappings and instructions will produce the right code, you can preview AI-generated snippets directly in the Code Connect UI:

- Select a connected component in the Code Connect UI.

- Change the properties of the design component to test different configurations (e.g., different button sizes, states, or variants).

- View the code snippet preview to see what your LLM would generate based on:

  - The component mapping

  - Your custom MCP instructions

  - The current property values

This preview helps you refine your instructions and ensure that the AI will generate appropriate code for all variations of your component.
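The preview depends on three inputs: the mapping, your MCP instructions, and the current property values. It can therefore help to treat each preview as one bundle of those inputs. The TypeScript sketch below gathers such a bundle into a single block of text so you can see what changes between configurations. The `PreviewInput` shape, the `formatPreviewContext` function, the example instruction, and the output format are all hypothetical. They are not the payload that the Code Connect UI or the Figma MCP server actually sends.

```typescript
// Hypothetical shapes; NOT the real Code Connect or MCP payload.
interface PreviewInput {
  path: string;                                 // mapped code path
  componentName?: string;                       // optional mapped component name
  instructions: string[];                       // your custom MCP instructions
  properties: Record<string, string | boolean>; // current design property values
}

// Gathers the three preview inputs into one readable block of text.
// Properties are sorted by name so the same configuration always yields the same text.
function formatPreviewContext(input: PreviewInput): string {
  const out: string[] = [];
  out.push(`Component: ${input.componentName ?? "(unnamed)"} at ${input.path}`);
  out.push("Instructions:");
  for (const rule of input.instructions) out.push(`- ${rule}`);
  out.push("Properties:");
  for (const key of Object.keys(input.properties).sort()) {
    out.push(`- ${key}: ${String(input.properties[key])}`);
  }
  return out.join("\n");
}

const context = formatPreviewContext({
  path: "src/components/Button.tsx",
  componentName: "Button",
  instructions: ["Always pass an aria-label when the button has no visible text."],
  properties: { variant: "primary", size: "large", disabled: false },
});
console.log(context);
```

In this sketch, changing a single property (for example, `size`) changes only the Properties section, while the mapping and instructions stay fixed. This mirrors how the preview lets you test one configuration at a time.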

Preview snippets are generated in a different context from the one the MCP server uses for actual requests. As a result, the code in the preview may differ slightly from what your LLM produces during real use, where it has access to your full conversation history and additional context.